As AI reshapes how people find and engage with information, a new promotion strategy is emerging: content seeding for large language models (LLMs). Where we once optimized websites for Google and Yandex, today we also need to consider how to appear in the responses of ChatGPT, Gemini, and other AI systems.

This article explains what a large language model is and how it works, how LLMs select information sources, and which seeding strategies can help your content make it into their knowledge bases. Beyond the theory, you'll find practical steps for successful content seeding, from choosing platforms to crafting materials that are more likely to be featured in AI responses.

Introduction to Large Language Models (LLMs) and Content Seeding

A Beginner's Guide to LLMs

What is a large language model? Simply put, it is a neural network trained on vast text datasets to understand and generate human-like language. Unlike simpler algorithms, LLMs use deep learning to model context and meaning across whole passages, which makes them powerful tools for answering complex queries.

Modern LLMs, such as GPT-4, Claude, or Gemini, have billions of parameters: numerical weights in the neural network that are adjusted during training. This scale enables them to process intricate requests, hold conversations, and generate coherent text on diverse topics.

How does an LLM work, in simplified terms? It analyzes the input text, draws on the patterns it learned from its training data (its "knowledge base"), and generates the most likely response or continuation word by word (more precisely, token by token). Rather than retrieving stored passages, the model "understands" the query's essence in a statistical sense and composes a new response.
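To make "the most likely continuation" concrete, here is a deliberately tiny Python sketch: a bigram model that counts which word follows which in a toy corpus, then greedily appends the most frequent follower. This is an illustration under heavy simplifying assumptions, not how production LLMs are built: real models use neural networks over tokens rather than word-count tables, and they usually sample from a probability distribution instead of always taking the top choice. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def continue_text(counts: dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Greedily append the most frequent follower of the last word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # the last word was never followed by anything: stop
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy corpus; real models train on vastly more text.
corpus = [
    "content seeding helps brands appear in ai answers",
    "content seeding takes time",
    "ai answers cite trusted sources",
]
model = train_bigrams(corpus)
print(continue_text(model, "ai", max_words=4))  # ai answers cite trusted sources
```

The model "knows" only what its corpus contains: a phrase that never appeared in training can never be produced, which is the intuition behind seeding content into training data.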

Notably, the models themselves don't have real-time internet access, although some products add it (for example, ChatGPT with its Browse feature). Out of the box, an LLM relies on knowledge acquired during training, so its information stops at its training cutoff date. This is a key reason seeding takes time: new content reaches a model's built-in knowledge only when the model is retrained or updated.

How LLMs Are Trained: Data, Architecture, and Fine-Tuning

Understanding LLMs starts with their training process, which involves several key stages:

- Data Collection. Modern LLMs are trained on text drawn from petabyte-scale sources: books, articles, web pages, academic papers, social media posts, and more. For instance, Common Crawl, one of the main data sources, contains over 100 billion web pages.

- Preprocessing. Collected texts are cleaned of errors, duplicates, and low-quality content, then tokenized for training.

- Pre-training. The model learns to predict the next word in a sentence or fill in text gaps. This computationally intensive process requires thousands of GPUs and millions of dollars.

- Fine-Tuning. After pre-training, the model is further trained on specific tasks, like dialogue or code generation, to enhance its utility.

- RLHF (Reinforcement Learning from Human Feedback). Advanced models undergo additional training with human feedback to improve response quality, safety, and accuracy.
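The preprocessing stage can be sketched in a few lines of Python. The example below makes simplifying assumptions: it treats documents as duplicates only when they match exactly after lowercasing and whitespace normalization, and it uses a minimum word count (an arbitrary threshold of 5) as a crude stand-in for a quality filter. Real pipelines typically add near-duplicate detection and learned quality classifiers, so read this as an illustration of the idea only.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash the same."""
    return re.sub(r"\s+", " ", text.lower()).strip()


def clean_corpus(docs: list[str], min_words: int = 5) -> list[str]:
    """Drop very short documents and exact duplicates (after normalization)."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in docs:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue  # crude stand-in for a low-quality filter
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # duplicate of a document we already kept
        seen.add(digest)
        kept.append(doc)
    return kept


docs = [
    "LLMs are trained on large text datasets.",
    "LLMs are   trained on large TEXT datasets.",
    "Click here!",
    "Transformers use an attention mechanism to relate tokens.",
]
print(clean_corpus(docs))  # keeps only the first and last documents
```

This is also why copying the same text onto many sites adds little: after deduplication, only one copy survives.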

The architecture of modern LLMs is based on the transformer, introduced by Google researchers in 2017. Its key innovation is the attention mechanism, which lets the model weigh the relationships between all tokens in the input, not just neighboring ones.
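The attention mechanism is usually written as softmax(Q·Kᵀ / √d) · V: each token's query vector is scored against every token's key vector, the scores are turned into weights, and the output is a weighted average of the value vectors. Below is a minimal pure-Python sketch of that formula. It assumes the query, key, and value vectors are supplied directly; in a real transformer they come from learned projections of token embeddings, and many attention heads run in parallel.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every key, not just nearby ones.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is the weighted average of all value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three hypothetical 2-dimensional token vectors used as Q, K and V at once.
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(vectors, vectors, vectors)
print([round(w, 3) for w in weights[0]])
```

Here the first token's query matches tokens 0 and 2 equally and token 1 not at all, so it splits most of its weight between tokens 0 and 2, regardless of where they sit in the sequence.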

Training an LLM from scratch is a complex, costly process that only large organizations can afford. Estimates suggest GPT-4's training cost exceeded $100 million. As a result, most companies and developers use pre-trained models and adapt them to their specific needs.

Why AI Selects Certain Sources

When exploring how businesses can use large language models, it's crucial to understand how these models prioritize information. Unlike traditional search engines, LLMs don't rely on explicit site rankings or link metrics. However, they still "favor" certain sources when generating responses.

Key criteria for source reliability include:

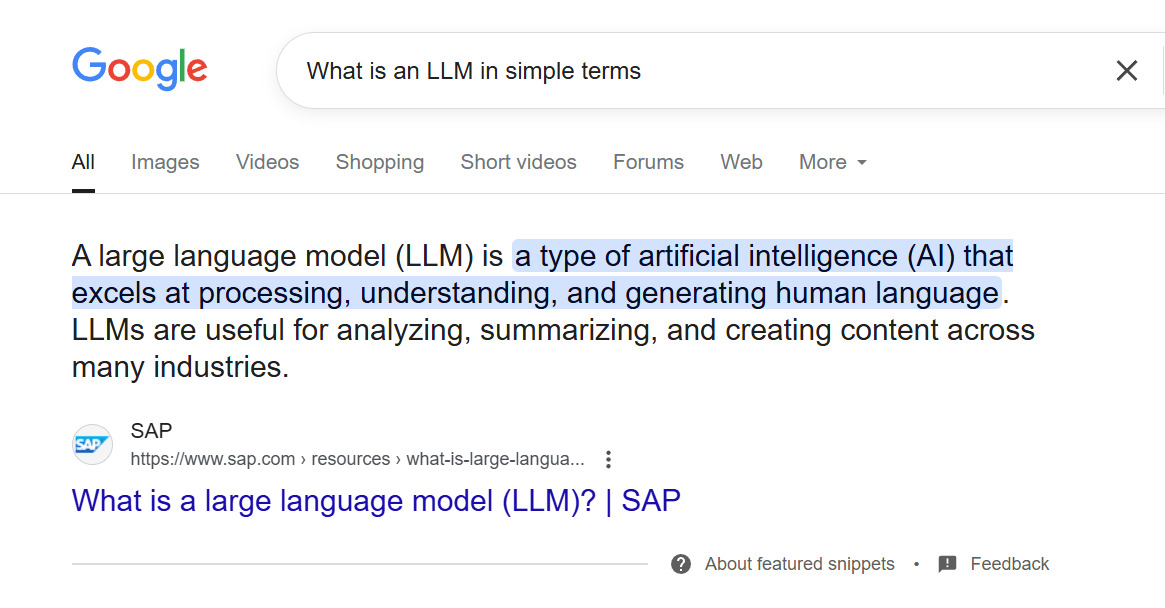

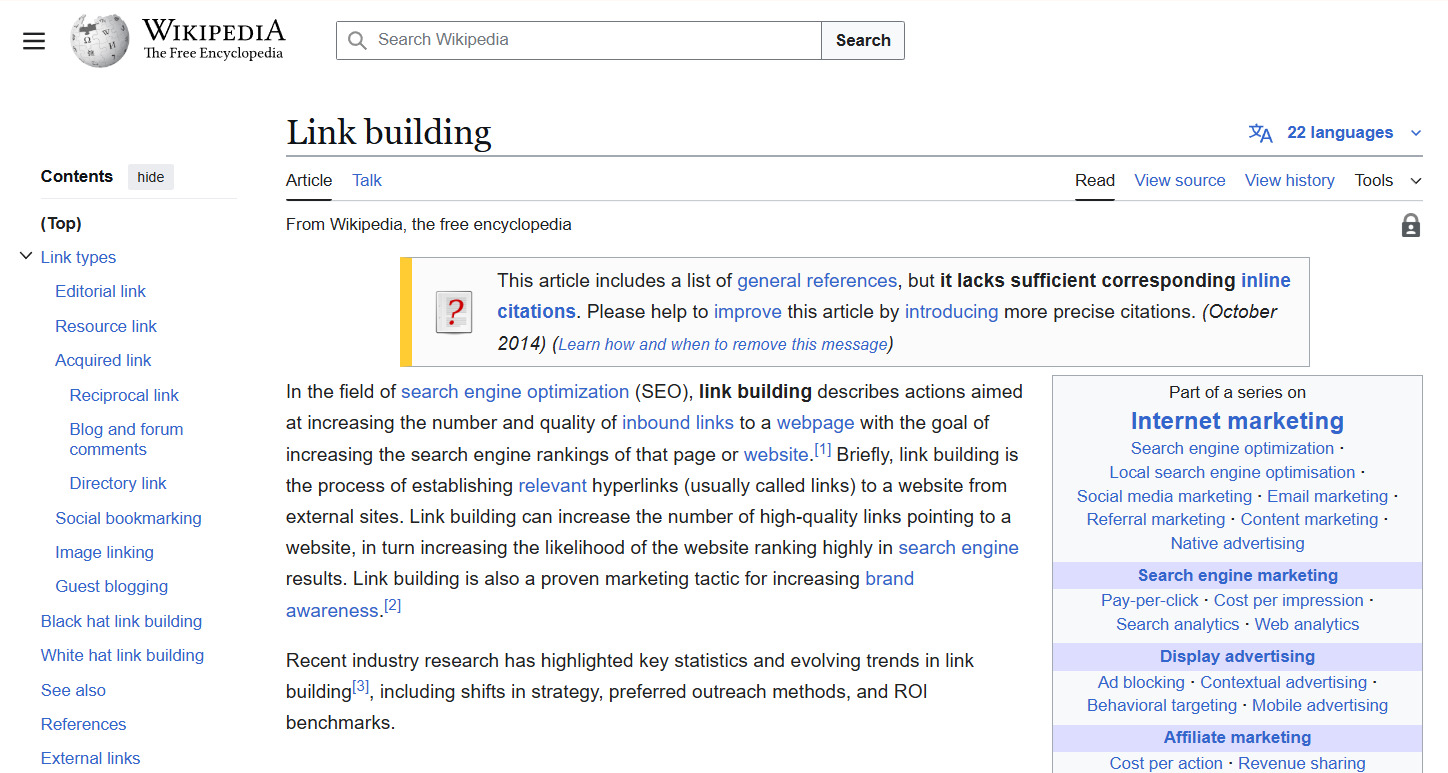

- Platform Authority. Content from Wikipedia, major news outlets, and academic journals is often included in training data and deemed more trustworthy.

- Formatting and Structure. Well-organized content with clear headings, lists, and highlighted key points is better processed during training.

- Depth and Completeness. Detailed explanations with examples and context outperform superficial content.

- Citability. Frequently cited content is more likely to appear in LLM responses.

- Timeliness. Most models update infrequently, but when other factors are equal, fresher content may have an edge.

- Uniqueness and Originality. Training pipelines typically filter out duplicates, so original content carries more weight than copies or light rewrites.

- Consistency with Other Sources. Information corroborated by multiple authoritative sources carries more weight.

These criteria aren't explicitly programmed; they emerge from how training data is selected and from the weights the neural network learns during training.

How Content Seeding for LLMs Works

Content seeding for AI involves strategically placing content on platforms likely to be included in LLMs' training datasets. What is seeding? It's the process of creating and distributing information to become part of an AI's "knowledge" and appear in responses to relevant queries.

To achieve this, you need to:

- Identify Authoritative Platforms, such as Wikipedia, GitHub, Stack Overflow, or Medium, which are regularly crawled for training data.

- Create High-Quality Content that aligns with these platforms' formats and requirements.

- Ensure Uniqueness and Value, making your content stand out among billions of texts.

- Gain Community Validation through likes, comments, or other engagement forms.

Seeding for LLMs differs significantly from traditional SEO. While search engines prioritize keywords, meta tags, and backlinks, LLMs value semantic depth, structure, and source authority.

Technical Aspects of Integration into AI Responses

To get a large language model to draw on your content, you must understand how LLMs process information. Unlike search engines, which index sites continuously, LLMs rely on datasets that were collected and processed in advance.

Models like ChatGPT use information in the following stages:

- Data Collection. Developers (e.g., OpenAI, Google, Anthropic) gather vast text arrays from public sources – web pages, books, articles, and forums.

- Tokenization and Vectorization. Texts are broken into tokens (word fragments or whole words) and converted into numerical vectors, representing words in multidimensional space.

- Training. The model learns to predict the next token based on prior context, forming an "understanding" of relationships between concepts and facts.

- Knowledge Storage. Unlike databases, LLMs don't store information explicitly; knowledge is embedded in billions of neural network parameters.

- Response Generation. When a user asks a question, the model generates a response based on statistical patterns learned during training, not by searching a database.
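Tokenization and vectorization can be illustrated with a toy example. The sketch assumes a hypothetical seven-entry vocabulary and splits each word greedily into the longest known pieces, loosely in the spirit of subword tokenizers such as WordPiece (real tokenizers learn vocabularies of tens of thousands of entries from data). The embedding table is also hypothetical: a real model learns a vector of hundreds or thousands of dimensions for every token.

```python
# Hypothetical toy vocabulary; real tokenizers learn far larger ones.
VOCAB = ["[UNK]", "content", "seed", "ing", "model", "s", "train"]
TOKEN_TO_ID = {token: i for i, token in enumerate(VOCAB)}

# Hypothetical 3-dimensional embedding table with one vector per token ID.
# In a real model these numbers are learned during training.
EMBEDDINGS = [[round(0.1 * (i + j), 1) for j in range(3)] for i in range(len(VOCAB))]


def tokenize(text: str) -> list[str]:
    """Split each word into the longest vocabulary pieces, left to right."""
    tokens: list[str] = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            # Try the longest remaining piece first, then shrink it.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in TOKEN_TO_ID:
                    tokens.append(piece)
                    start = end
                    break
            else:
                # No vocabulary entry starts here: emit [UNK] for one character.
                tokens.append("[UNK]")
                start += 1
    return tokens


def vectorize(tokens: list[str]) -> list[list[float]]:
    """Map each token to its ID, then look up that ID's embedding vector."""
    return [EMBEDDINGS[TOKEN_TO_ID[token]] for token in tokens]


tokens = tokenize("Seeding content models")
print(tokens)                            # ['seed', 'ing', 'content', 'model', 's']
print([TOKEN_TO_ID[t] for t in tokens])  # [2, 3, 1, 4, 5]
print(vectorize(tokens)[0])
```

Note that "seeding" and "models" are never stored as whole words; the model only ever sees their pieces and the vectors attached to them.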

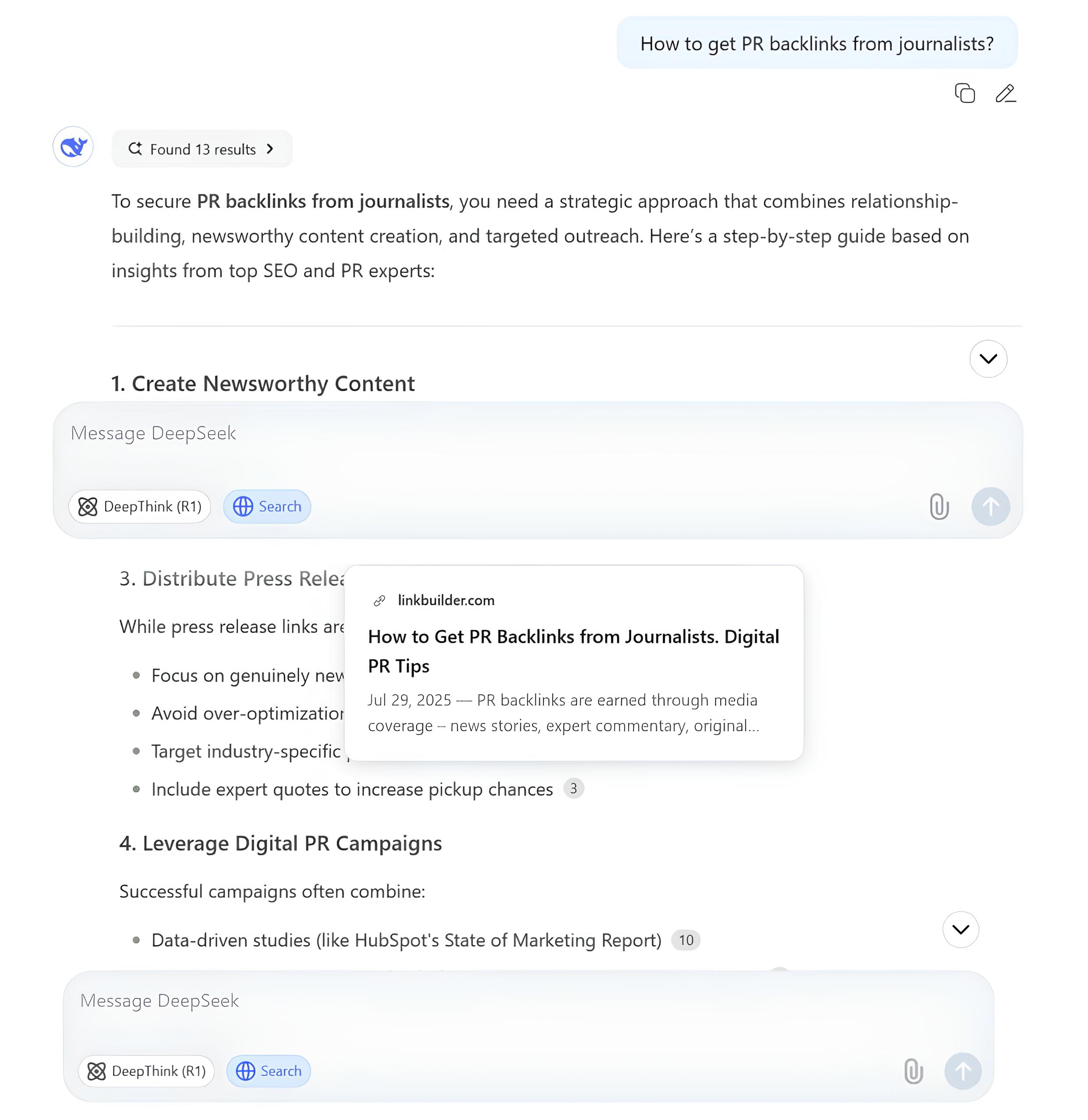

Newer LLM-based services may also access the internet in real time, which creates additional opportunities for your content to be discovered and used.

Training data sources for LLMs include:

- Common Crawl – one of the largest open web archives, containing petabytes of data from billions of web pages. If your site is publicly accessible and its robots.txt doesn't block Common Crawl's crawler (CCBot), it is likely to be included.

- Wikipedia – a critical source of structured knowledge for LLMs, often used for factual responses.

- BookCorpus – a collection of thousands of books, aiding in understanding long-form texts and narratives.

- WebText/OpenWebText – curated collections of web pages linked from highly upvoted Reddit posts.

- GitHub – a primary source for code-related models.

- Stack Exchange/Stack Overflow – key for technical questions and answers.

- Academic Publications – used for specialized models requiring scholarly knowledge.
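Common Crawl gathers pages with its own crawler, CCBot, which respects robots.txt, so a basic sanity check is whether your robots.txt lets it in. The sketch below uses Python's standard `urllib.robotparser` to test a robots.txt against CCBot and, for comparison, OpenAI's GPTBot. The crawler list and the sample robots.txt are illustrative assumptions; confirm current user-agent names in each vendor's documentation, and keep in mind that being crawlable doesn't guarantee inclusion in any training dataset.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens to check (illustrative list): CCBot is Common Crawl's
# crawler and GPTBot is OpenAI's.
AI_CRAWLERS = ["CCBot", "GPTBot"]


def crawler_access(robots_txt: str, url: str) -> dict[str, bool]:
    """Report whether each crawler may fetch `url` under the given robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {bot: parser.can_fetch(bot, url) for bot in AI_CRAWLERS}


# Sample robots.txt that blocks CCBot entirely but only /admin/ for others.
robots = """
User-agent: CCBot
Disallow: /

User-agent: *
Disallow: /admin/
"""
print(crawler_access(robots, "https://example.com/blog/llm-seeding"))
# {'CCBot': False, 'GPTBot': True}
```

To check a live site instead, you could point a `RobotFileParser` at your own `/robots.txt` with `set_url()` and call `read()` before `can_fetch()`.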

To increase your content's chances of inclusion in future LLM training datasets, focus on these platforms. Presence across multiple key platforms amplifies your content's "signal" of importance, a core seeding strategy.

Key Stages of Seeding: From Creation to Indexing

Content Preparation (Optimizing for Query Semantics)

Unlike traditional SEO, LLM SEO emphasizes not just keywords but the semantic field – a set of related concepts and terms.

Here's a step-by-step process for preparing content for LLMs:

- Semantic Core Research
  - Identify primary queries your content should address.
  - Expand with related terms, synonyms, and variations.
  - Include industry-specific terms to showcase expertise.

- Information Structuring
  - Use a clear hierarchy of headings (H1, H2, H3).
  - Break text into logical blocks with subheadings.
  - Use bulleted and numbered lists for enumerations.
  - Create tables for data comparisons.

- Content Optimization
  - Start with a direct answer to the main question (crucial for featured snippets and LLM responses).
  - Use factual data: numbers, dates, names, and statistics.
  - Support claims with links to authoritative sources.
  - Include unique examples and case studies not found elsewhere.

- Completeness and Accuracy Check
  - Ensure content addresses all aspects of the main question.
  - Conduct fact-checking for all information.
  - Include diverse perspectives for complex topics.

Placement on High-Priority Platforms for LLMs

After creating optimized content, the next step is strategic placement. The key is diversifying your presence across authoritative platforms.

Why this matters:

- Amplification Effect – Information appearing across multiple authoritative platforms is more likely to be deemed reliable by LLMs.

- Cross-Validation – Facts repeated consistently across several sources are reinforced during training, and search-enabled assistants often compare multiple sources before answering.

- Increased Reach – Different models may prioritize different data sources.

Your placement strategy should include:

- Platform Prioritization – Start with the most authoritative resources in your industry. For technical topics, this might be GitHub or Stack Overflow; for academic content, arXiv or ResearchGate; for general topics, Wikipedia or major media.

- Content Adaptation – Tailor the same material to each platform's format. What works on Medium may not suit Reddit or Wikipedia.

- Sequential Placement – Begin with one platform, then reference this content when posting on others, creating a network of interlinked materials.

- Community Engagement – Actively participate in discussions, respond to comments, and supplement information to boost visibility and relevance.

Benefits of Seeding Strategy for Businesses and Experts

Boosting Visibility in the AI Search Era

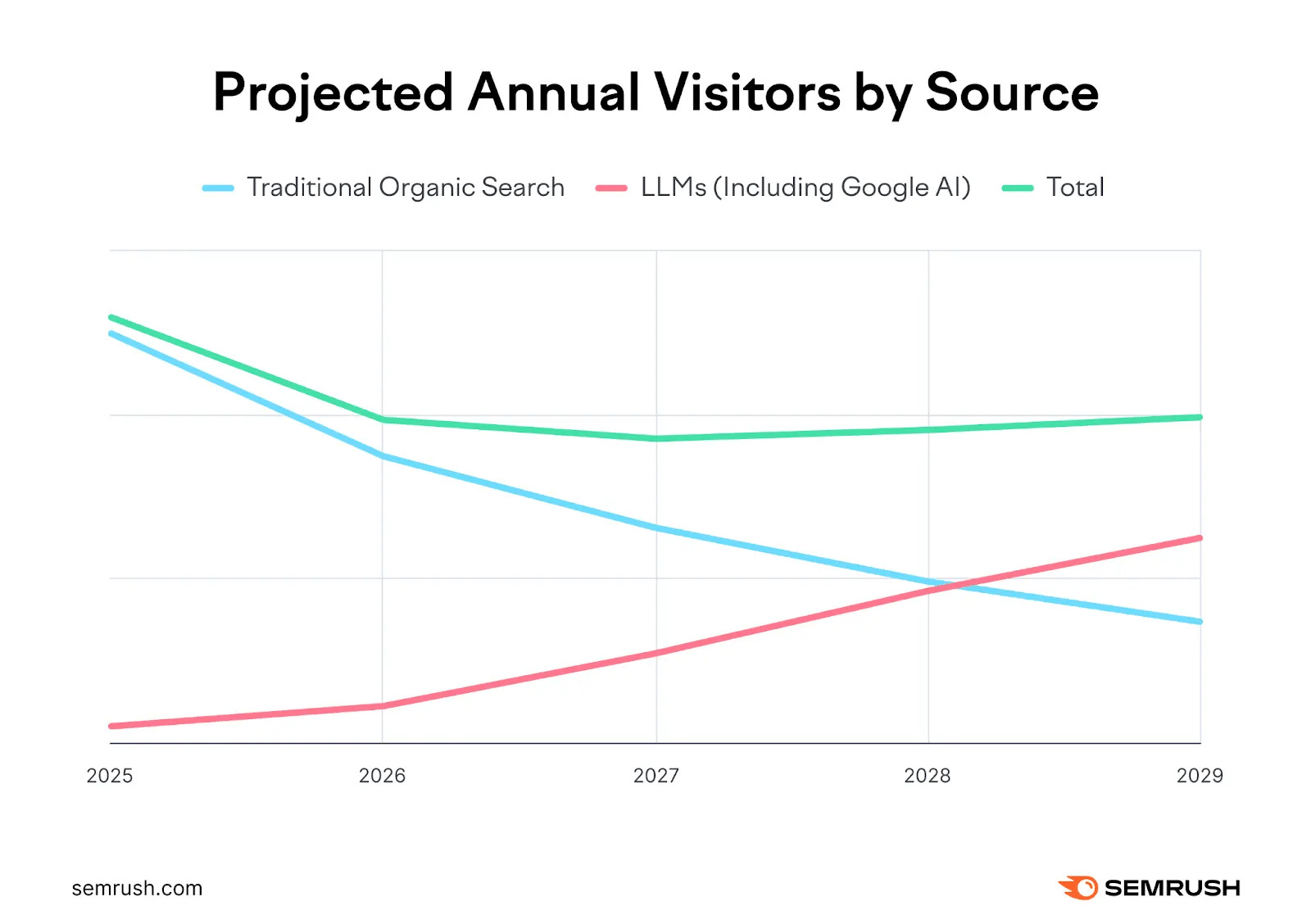

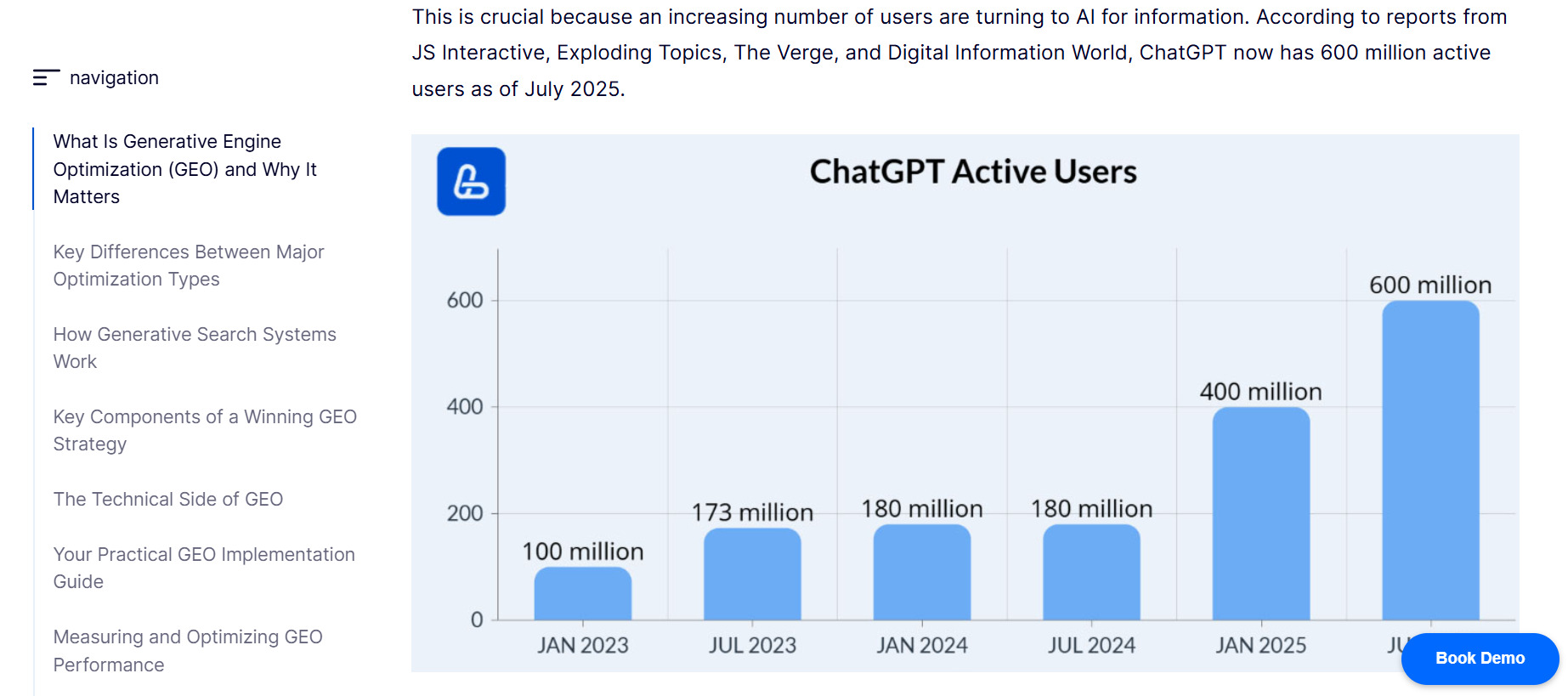

AI-powered search is starting to displace traditional search engines. Gartner predicts that search engine query volume will drop by 25% by 2026, and Semrush predicts that by 2028, AI search users will outnumber those using traditional search engines.

Key benefits of seeding strategies include:

- New Audience Channel. When your brand or expertise is cited in responses from ChatGPT or similar systems, you reach audiences who may not actively search for you.

- Strengthening Brand Authority. Being cited by AI as an expert can quickly boost trust in your brand, since many users perceive AI-provided information as objective and verified.

- 24/7 Passive Marketing. Once seeded, your content works continuously, answering user queries even when you're offline, scaling your expertise without additional costs.

- Early-Stage Funnel Reach. Users often turn to AI during the problem-research phase, before forming specific solution queries. Appearing in these responses helps shape demand for your product.

- Bypassing Ad Blockers. Unlike traditional ads, AI response mentions aren't blocked and are perceived as organic content.

How Content Seeding Replaces Traditional SEO

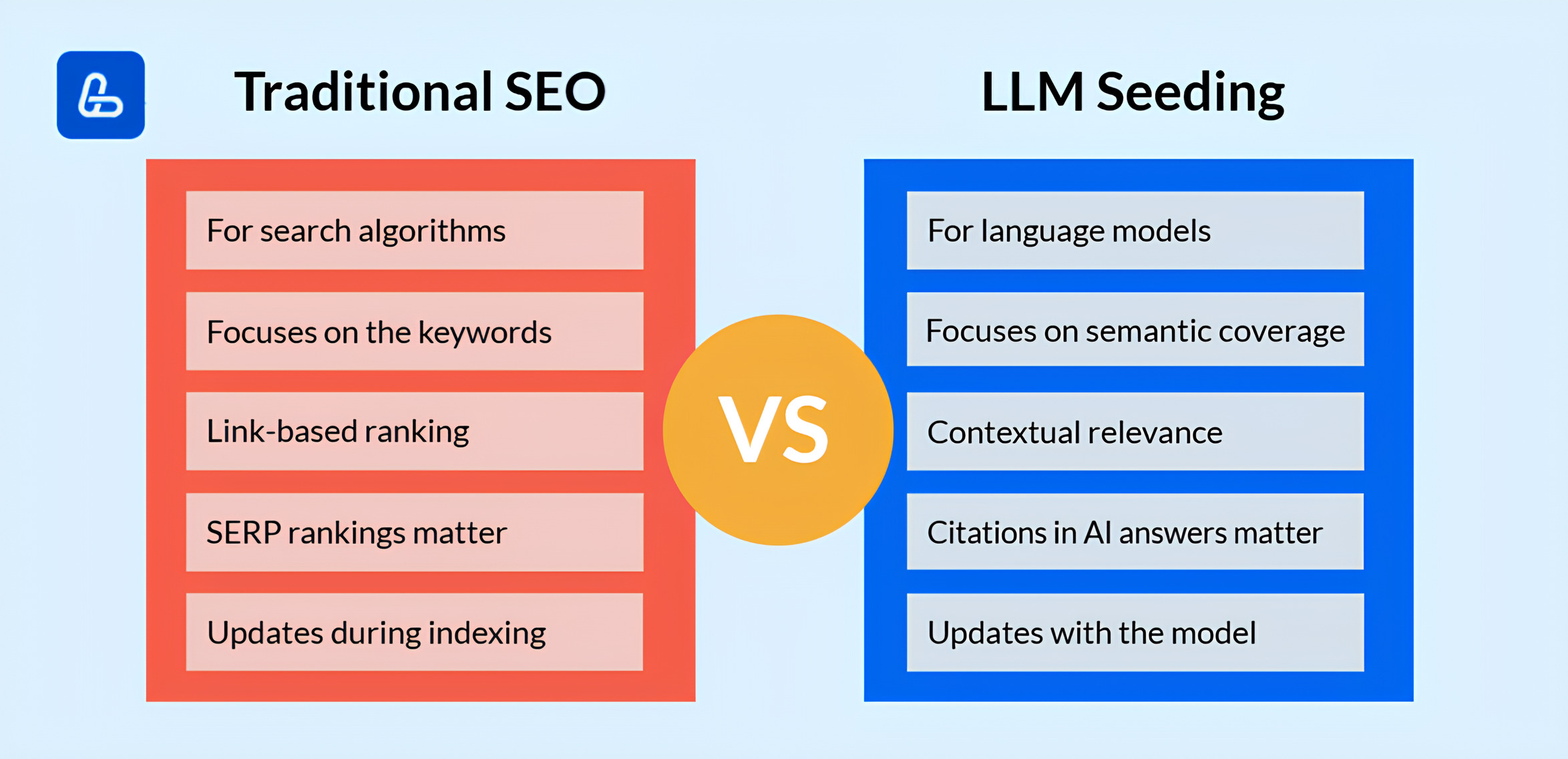

LLM seeding differs from traditional SEO in several ways, and those differences reflect a fundamental shift in how information is searched for and consumed. This requires rethinking traditional SEO methods.

A detailed comparison of traditional SEO and LLM seeding:

| Aspect | Traditional SEO | LLM Seeding |
|---|---|---|
| Target System | Search algorithms | Large language models |
| Key Metrics | SERP rankings, click-through rates | Frequency of AI response citations |
| Content Optimization | For keywords and search intent | For semantic connections and cognitive frameworks |
| Authority | Determined by backlinks and domain metrics | Determined by content quality and platform reputation |
| Result Updates | During indexing (days/weeks) | During model updates (months/years, rarely instant) |
| Presentation Format | 10 blue links + ad blocks | Direct answers, often without links |
| User Interaction | Requires site visits | Information consumed within AI interface |

Why LLM seeding is becoming the new SEO:

- Changing User Behavior. More people prefer instant AI answers to browsing multiple sites. According to Menlo Ventures, as of June 2025, 61% of U.S. adults had used AI tools in the past six months, and nearly 20% used them daily. Globally, Menlo estimates 1.7–1.8 billion users, with 500–600 million active daily.

- Declining CTR in Traditional Search. With zero-click results (answers displayed directly without links) and rising AI use, website visits from search results are decreasing.

- Long-Term Impact. Content in LLM training data influences responses until the next model update, often lasting longer than search engine rankings.

- Contextual Presence. Unlike search engines, where you appear only for relevant queries, AI responses can mention your brand in a wide range of contexts.

However, abandoning traditional SEO entirely is premature. The optimal strategy combines both approaches:

- Use traditional SEO for immediate traffic.

- Develop LLM seeding for long-term AI visibility.

- Adapt existing SEO content to meet LLM requirements.

- Monitor shifts in how your niche searches for information.

Top Platforms for Content Seeding

Official and Authoritative Websites

LLMs prioritize content from platforms with strict editorial control and strong reputations, because these sources are treated as more reliable in training data.

Benefits of authoritative sites include:

- Greater weight in AI response generation.

- Higher chances of retention in knowledge bases during updates.

- Better preservation of authorship and attribution.

- More accurate representation of specific details.

Examples:

Wikipedia – the leading source of structured knowledge for LLMs, often used for definitions and factual data.

How to use: Create or edit articles related to your expertise, adhering to Wikipedia's strict neutrality and verifiability rules. Direct promotion is prohibited, and editors with a conflict of interest are expected to disclose it; your company can be mentioned only where independent, reliable sources support it.

Government and Educational Resources (.gov, .edu, academic journals) are highly reliable, especially for statistics and official data.

How to use: Publish research with universities, contribute to government reports, or provide expert commentary for official publications.

Major Media (BBC, The New York Times, Reuters) are used by LLMs for current events and trends.

How to use: Collaborate with journalists via HARO or direct outreach, offer expert commentary, and create newsworthy stories.

Industry-Leading Sites. Each niche has recognized thought leaders, like TechCrunch or Wired for tech, or HubSpot for marketing.

How to use: Submit guest posts, share unique research, or become a regular expert commentator.

Working with authoritative sites requires a long-term approach and high content standards, but it's the most reliable way to ensure your expertise appears in LLM responses for years to come.

Q&A Platforms

Q&A platforms allow precise targeting of questions your audience asks, increasing the likelihood of your expertise appearing in AI responses.

Effective strategies for Q&A platforms include:

- Finding Relevant Questions with high views but low-quality answers.

- Crafting Comprehensive Answers with examples, data, and links to your resources where appropriate.

- Regular Community Participation to build authority, not just one-off responses.

- Earning Ratings and Reviews to establish credibility.

- Updating Old Answers to maintain relevance.

Examples:

Quora – a global platform with millions of users, where expert answers often make it into LLM training datasets.

How to use: Post answers yourself or use LinkBuilder.com for strategic placement. Their experts identify relevant Quora discussions, craft authoritative responses, and post them from trusted accounts.

Stack Overflow and other Stack Exchange sites – essential for technical topics, widely used in LLM training for programming and IT.

How to use: Ensure proper code formatting and technical details, as these impact how LLMs reproduce your information.

Reddit – while not a formal Q&A platform, many subreddits function similarly through question posts and comment responses.

How to use: Engage in discussions or use LinkBuilder.com for Reddit link placement. Their experts find relevant threads and post your links strategically.

Many LLMs, including free and open models, are trained on open sources like these, so high-quality Q&A responses have a strong chance of inclusion in their datasets.

Content Aggregators and Expert Platforms

These platforms are valuable for curating high-quality content likely to be included in LLM training datasets.

For maximum impact, publish:

- Research and Analytics with unique data unavailable elsewhere.

- Detailed Guides and Tutorials with step-by-step instructions.

- Case Studies with Specific Results and reproducible methodologies.

- Expert Opinions on Industry Trends to showcase authority.

Always support claims with data and authoritative sources to boost credibility with both readers and LLMs.

Examples:

HARO (Help a Reporter Out) – connects journalists with experts, whose quotes often appear in media and LLM training data.

How to use: Submit expert responses or use LinkBuilder.com’s HARO service for automated monitoring and timely submissions.

Medium – a popular platform with a curation system that promotes high-quality content, increasing its chances of inclusion in LLM datasets.

How to use: Publish on your profile and in major Medium publications (e.g., The Startup, Better Programming) to maximize reach.

GitHub – a key source for technical documentation and open-source projects, used by LLMs for code-related training.

How to use: Create detailed README files, wikis, and project documentation, which are highly valued in technical LLM training data.

ResearchGate – a platform for academic papers, often used for training LLMs on scholarly topics.

How to use: Publish research papers or preprints with valuable insights to increase inclusion chances.

HackerNoon/Dev.to – specialized platforms for technical content, popular among developers.

How to use: Register via GitHub, Twitter, or email, post in Markdown with relevant tags, and engage with the community through comments and reactions.

Social Media and Forums

Social media and forums are vital for training LLMs on trends and public opinions.

Twitter (X) – authoritative users' threads are significant, especially for tech and business topics, often included in LLM datasets for modern language and trends.

How to use: Create detailed threads on your expertise, use hashtags for visibility, and engage with other experts to boost reach.

LinkedIn – ideal for B2B niches and professional content, with highly engaged posts often included in LLM datasets.

How to use: Share industry insights, research data, and trend analysis to add value to business-focused LLMs.

Specialized Forums (e.g., Indie Hackers for startups, Hacker News for tech, Product Hunt for new products) provide niche expertise for LLMs.

How to use: Add products, post updates, and engage with the community to promote innovative solutions and networking.

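Engagement is critical on social platforms. Some dataset builders use interaction signals to select content (WebText, for example, relied on Reddit upvotes), so posts with many likes, comments, and shares have a better chance of inclusion.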

To maximize effectiveness:

- Encourage Discussion – Ask open-ended questions and invite experiences.

- Respond to Comments – Increase engagement.

- Use Visuals – Attract more attention.

- Post at Optimal Times – When your audience is most active.

- Join Trending Discussions – Add your expertise to boost visibility.

News and Analytical Resources

News and analytical resources shape LLMs' understanding of events, trends, and facts.

Examples:

Bloomberg, Forbes – primary sources for financial and business data, often used by LLMs for economics and market queries.

How to use: Offer expert commentary, publish guest columns, or participate in their research and surveys.

TechCrunch, Wired – authoritative tech and startup sources, increasing the likelihood of inclusion in LLM responses about industry innovations.

How to use: Create newsworthy stories through product launches, research, or unique data to attract tech journalists.

VentureBeat, The Verge – key for tech, business, and culture intersections, accepting submissions via "Submit a Story" or editorial contact.

How to use: Prepare unique, well-researched content with expert insights or exclusive data, and engage in post-publication discussions.

Industry-Specific Media – Each niche has authoritative outlets, like MarTech (formerly Marketing Land) for marketing or The Lancet for medicine.

How to use: Collaborate actively with relevant publications.

Key considerations for news resources:

- Timeliness – Offer insights on hot industry topics while they're relevant.

- Data and Research – Journalists value exclusive data for mentions.

- Consistency – Build long-term relationships with key outlets.

- Multimedia – Include infographics, videos, and interactive elements.

Presence in authoritative news outlets is critical for fast-evolving topics, as developers often update LLM datasets with fresh content from trusted media to keep models current.

Optimizing Content for Language Models

Structure and Style for Maximum Reach

Using large language models in marketing starts with proper content structure. LLMs process and "remember" well-organized, logical content better.

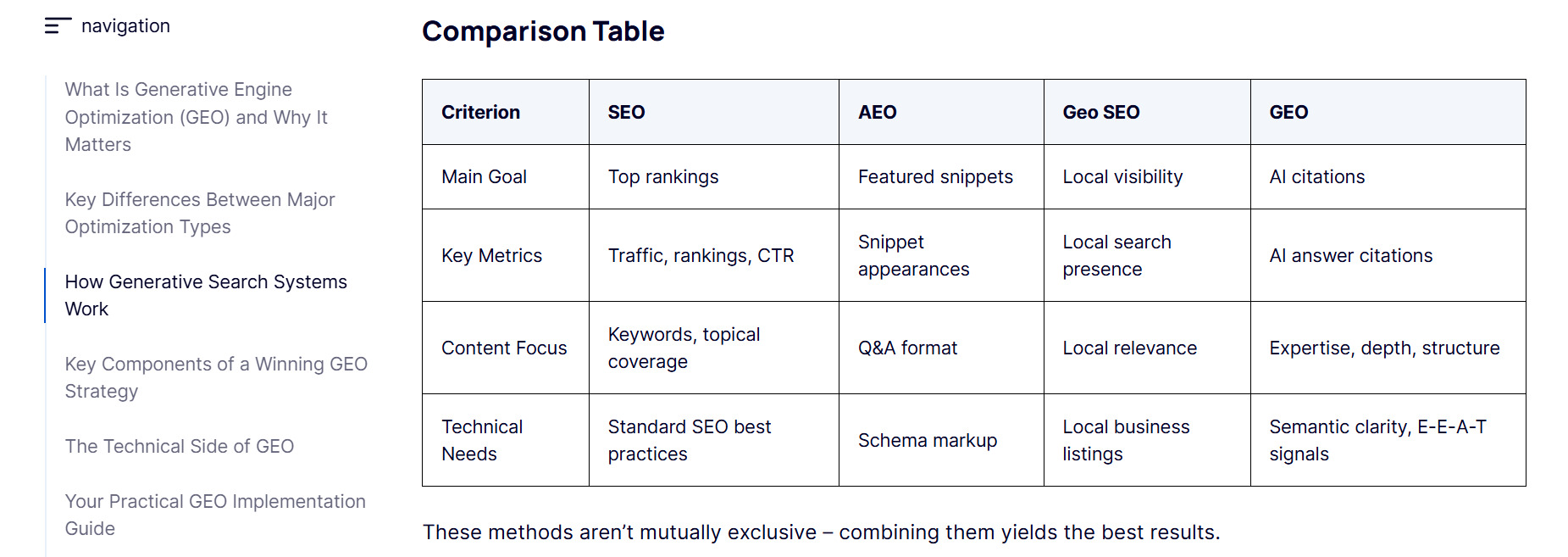

Our article Generative Engine Optimization (GEO): How to Promote Sites in the AI Era illustrates key structural elements LLMs favor:

Clear Heading Hierarchy (H1-H4). This helps AI understand the importance and relationships of sections. Headings should precisely reflect the content, avoiding vague or overly creative titles, as models prefer specificity.

Bulleted and Numbered Lists. These organize information for easy digestion by both humans and AI.

Use lists for:

- Process steps

- Feature descriptions

- Problem-solving options

FAQ Blocks. These align with the "query-response" format LLMs use, providing direct answers to common questions.

Tables. These effectively structure comparative data, which LLMs increasingly understand and cite accurately.

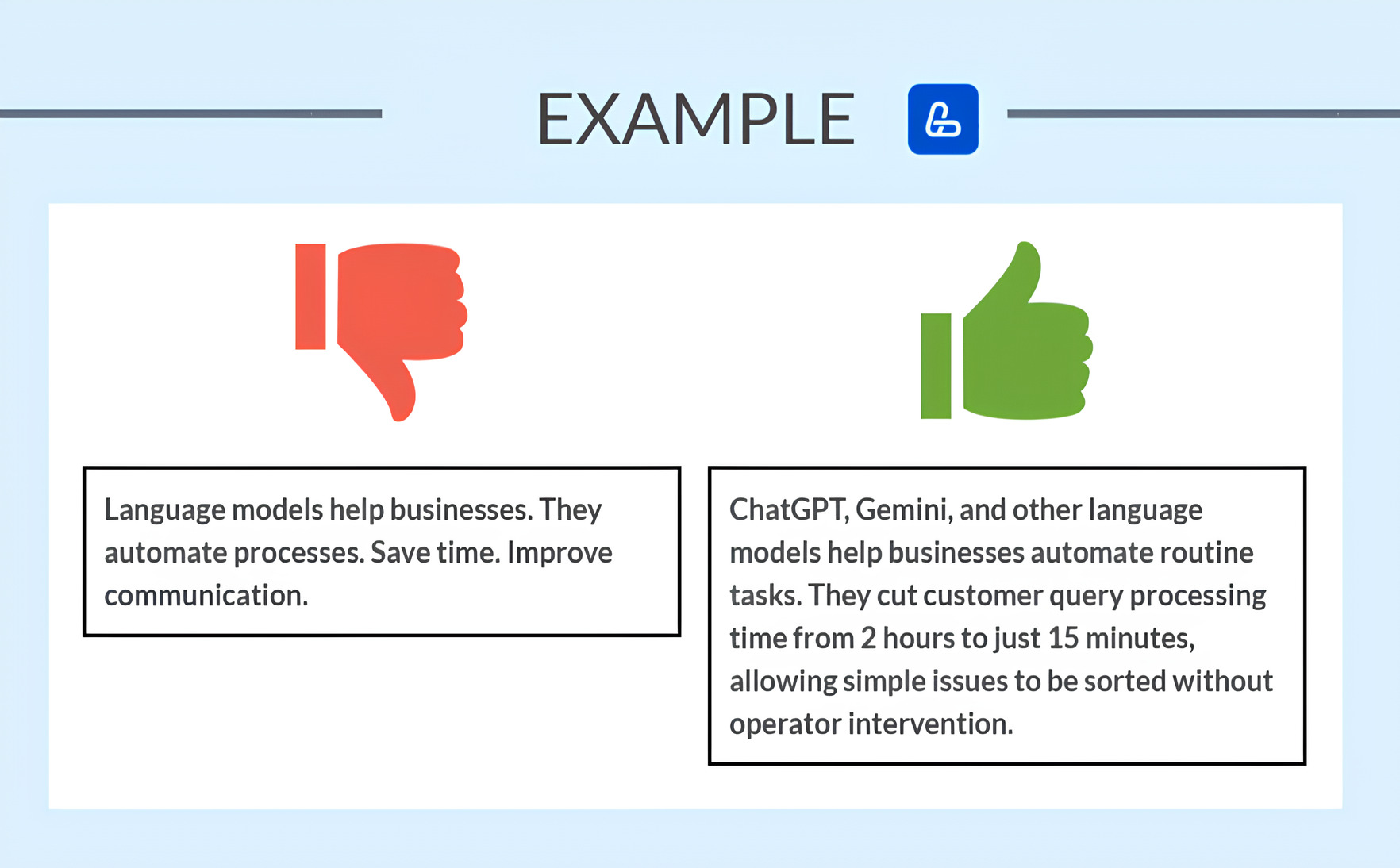

Medium-Sized Paragraphs. LLMs better process paragraphs of 3–5 sentences with a clear main idea than lengthy texts or short, contextless snippets.
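To check the 3–5 sentence guideline across a long article, a rough script helps. The sketch below splits text into paragraphs at blank lines and counts sentences with a naive rule (a ".", "!" or "?" followed by whitespace), so abbreviations such as "e.g." will inflate the count. The 3 and 5 bounds come from the guideline above; the function names and sample text are hypothetical.

```python
import re


def sentence_count(paragraph: str) -> int:
    """Naively count sentences by splitting after ., ! or ? plus whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", paragraph.strip())
    return len([p for p in parts if p])


def flag_paragraphs(text: str, low: int = 3, high: int = 5) -> list[tuple[int, int]]:
    """Return (paragraph index, sentence count) for paragraphs outside low..high."""
    paragraphs = [p for p in re.split(r"\n\s*\n", text) if p.strip()]
    flagged = []
    for i, para in enumerate(paragraphs):
        n = sentence_count(para)
        if not low <= n <= high:
            flagged.append((i, n))
    return flagged


article = (
    "LLMs predict the next token.\n\n"
    "Seeding takes time. Models are retrained rarely. Plan ahead. Keep content evergreen.\n\n"
    + "Short sentence. " * 6
    + "Final sentence."
)
print(flag_paragraphs(article))  # [(0, 1), (2, 7)]
```

The first paragraph is flagged as too short to carry context and the third as too long; the second passes.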

For style, to maximize impact with LLMs:

- Use Industry Terminology with explanations for non-experts to establish expertise while maintaining accessibility.

- Include Synonyms and Related Terms. For example, mention "content seeding," "content placement," and "data inclusion" to broaden the semantic field and increase response visibility.

- Use Natural Language without keyword stuffing, since quality filters in training pipelines tend to discard spammy, keyword-stuffed text.

- Maintain Logical Flow between sentences and paragraphs for better comprehension and reproduction by LLMs.

- Emphasize Factual Information – numbers, dates, names, and statistics are perceived as authoritative.

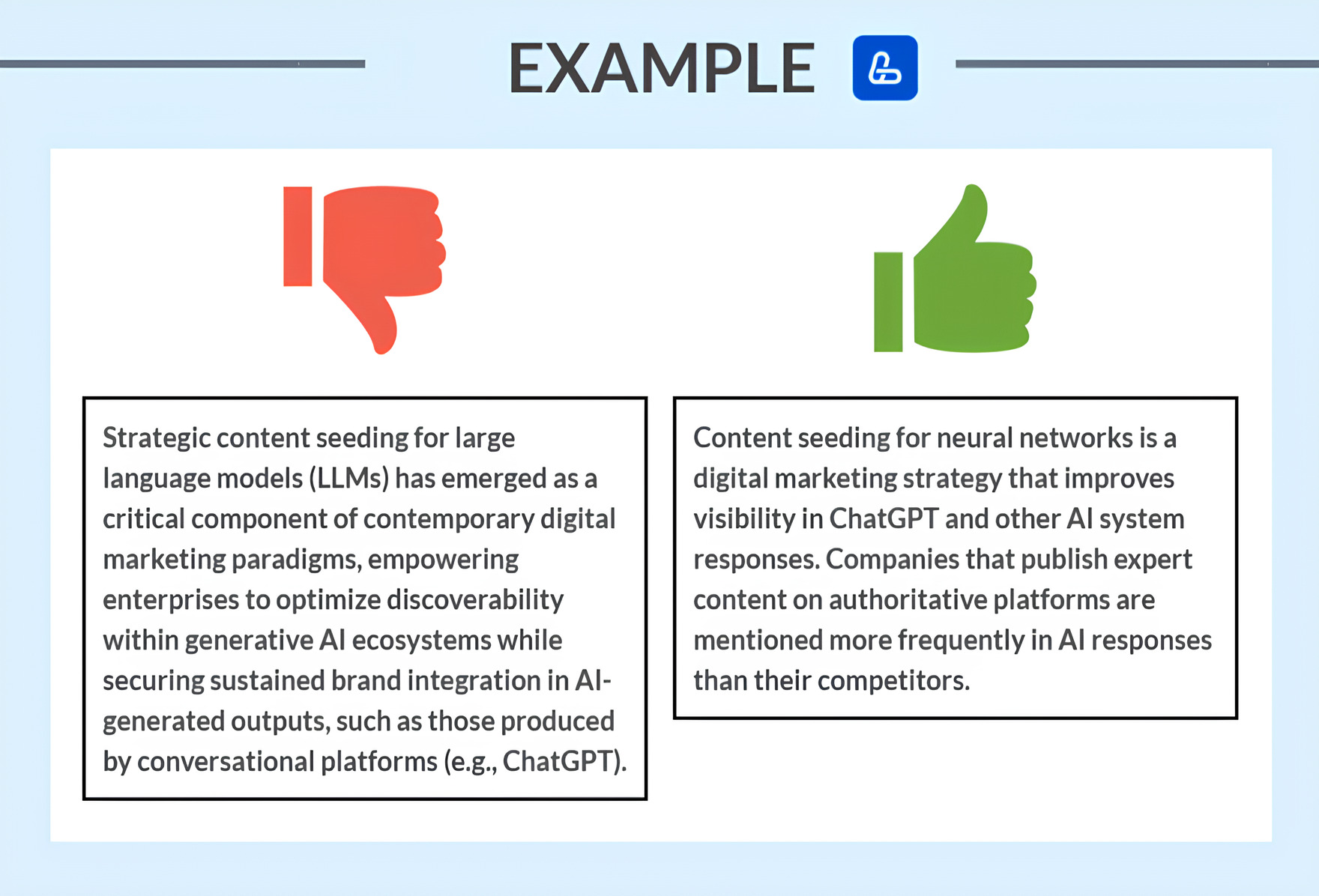

Example of an LLM-optimized paragraph:

Visual Elements and Their Role

Visuals are increasingly important because multimodal LLMs (e.g., GPT-4V, Gemini, Claude 3) can interpret images.

Graphs and Charts effectively present statistics and trends. For LLMs, ensure:

- Clear axis labels

- A legend explaining all elements

- A title summarizing the data

Diagrams and Infographics clarify complex processes and technical concepts.

Screenshots with Instructions work well for tutorials, showing real interfaces with annotations.

Text-only LLMs can't see images, so the text attached to them is critical:

- Alt-Text – A concise, accurate description of the image; it is indexed by search engines and can end up in LLM training data.

- Captions – Go beyond stating the obvious (e.g., "This is a graph") to add context, like "Graph showing AI marketing growth from 2022–2023."

- Contextual Integration – Text around images should connect logically, helping LLMs link visuals to content.
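Missing alt text is easy to detect automatically. The sketch below uses Python's standard `html.parser` to collect the `src` of every `<img>` whose `alt` attribute is missing or empty; the class name and sample HTML are hypothetical. Treat the results as items to review: an empty `alt` is the correct choice for purely decorative images, and the script doesn't judge whether existing alt text or captions are actually descriptive.

```python
from html.parser import HTMLParser


class ImageAltAudit(HTMLParser):
    """Collect the src of every <img> whose alt text is missing or empty."""

    def __init__(self) -> None:
        super().__init__()
        self.missing_alt: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # Valueless attributes arrive as None, so fall back to "".
        alt = (attributes.get("alt") or "").strip()
        if not alt:
            self.missing_alt.append(attributes.get("src") or "?")


html = """
<figure>
  <img src="growth.png" alt="Graph showing AI marketing growth from 2022-2023">
  <figcaption>AI marketing growth, 2022-2023</figcaption>
</figure>
<img src="diagram.png">
<img src="logo.png" alt="">
"""
audit = ImageAltAudit()
audit.feed(html)
print(audit.missing_alt)  # ['diagram.png', 'logo.png']
```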

How visuals enhance AI context:

- Fact Validation – Visuals supporting text increase perceived reliability.

- Semantic Expansion – Captions add terms and connections to your content's semantic network.

- Structural Markers – Visuals act as "anchor points" for LLMs to navigate document structure.

Practical tips for visuals:

- Use original images to boost uniqueness.

- Add branding to charts and infographics for attribution.

- Ensure readable text in images for scanning and recognition.

- Pair complex data with visualizations for accurate LLM interpretation.

How does an LLM work with visuals? Multimodal models process images with computer vision components and link them to the surrounding text for a more complete understanding.

Challenges and Limitations of Working with LLMs

Common Challenges in Seeding

Several practical challenges arise when planning seeding strategies:

Competition for Limited Dataset Slots. Developers can't include the entire internet due to:

- Computational limits

- Training costs (each gigabyte increases expenses)

- Need to filter low-quality content

This creates a "battle for attention" among content creators, which intensifies as more companies recognize the importance of LLM seeding.

Information Obsolescence. LLMs update periodically, not continuously, creating knowledge gaps. This requires:

- Evergreen content that remains relevant long-term.

- Regular updates to stay included in new model versions.

Lack of Transparency. Unlike search engines with webmaster guidelines, LLM developers rarely disclose exact criteria for data inclusion.

Attribution Loss. LLMs often cite information without crediting sources, reducing branding benefits. Counter this by:

- Embedding brand mentions in key information.

- Creating unique terms or frameworks tied to your brand.

- Publishing on multiple authoritative platforms to strengthen brand association.

Language and Cultural Barriers. Most LLMs are trained on English-heavy content, which disadvantages non-English materials.

Platform Restrictions. Key platforms have strict rules:

- Wikipedia demands neutrality and verifiability.

- GitHub focuses on code and documentation, not marketing.

- Academic journals accept only peer-reviewed research.

Measuring Effectiveness. Unlike SEO with clear metrics (rankings, traffic, conversions), LLM seeding success is hard to quantify. Testing queries in models helps, but full visibility is elusive.

Evolving Algorithms. LLM training methods change constantly, rendering current strategies potentially obsolete. Early adopters of systematic LLM seeding gain a significant edge in the AI-driven information landscape.

Ethical Considerations

LLM seeding raises ethical questions at the intersection of technology, marketing, and social responsibility.

Misinformation. LLMs can inadvertently spread false information from training data. To seed ethically:

- Verify all factual claims.

- Cite sources for controversial topics.

- Avoid exaggerations or broad generalizations.

- Update content with new data.

AI Response Manipulation. Some companies attempt to "game" LLMs to promote products or suppress negative information, undermining trust and prompting stricter filters.

Bias and Diversity. Consider:

- Inclusive terminology and examples.

- Diverse perspectives.

- Avoiding stereotypes and generalizations.

Intellectual Property. LLMs often rephrase existing content, which raises copyright and attribution issues.

Transparency. Be open about intentions:

- Avoid fake profiles for content distribution.

- Don't disguise ads as independent research.

- Disclose commercial interests.

Ethical guidelines for creating content for LLMs:

- Create Value – Focus on audience benefit, not just promotion.

- Follow Platform Rules – Adhere to site terms.

- Be Transparent – Clearly identify yourself and interests.

- Respect Privacy – Exclude personal data without consent.

- Aim for Long-Term Impact – Avoid short-term tactics that erode trust.

Ethical seeding is not only morally sound but also sustainable, as LLM developers improve anti-manipulation filters.

Step-by-Step Guide: Making Your Site an AI Source

Step 1: Analyze Current Content for LLM Compatibility

To make your site an AI source, start with a content audit to assess how well your pages suit LLMs.

Evaluate:

- Factual Density – Count facts, figures, dates, and examples per page. LLMs favor information-rich content with verifiable claims.

- Structural Clarity – Check for:
  - Logical heading hierarchy (H1-H4).
  - Lists and enumerations where appropriate.
  - Tables for comparative data.
  - Subheadings dividing text into logical blocks.

- Topic Completeness – Ensure content fully addresses audience questions.

- Uniqueness and Originality – Verify unique research, case studies, or insights.

- Information Timeliness – Identify outdated data or recommendations.

- Stylistic Clarity – Avoid complex jargon, overly long sentences, or unclear text.
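Factual density can be approximated automatically. This hypothetical helper counts numeric tokens (integers, decimals, percentages, years) per 100 words. It is only a proxy: it misses names and dates written out in words, and no target value is defined here, so use it to compare your pages with each other rather than against a fixed threshold.

```python
import re


def factual_density(text: str) -> float:
    """Numeric tokens (numbers, years, percentages) per 100 words."""
    words = text.split()
    if not words:
        return 0.0
    # Matches e.g. "25%", "2026", "1.7" and "1,000" as single facts.
    numbers = re.findall(r"\d+(?:[.,]\d+)*%?", text)
    return 100 * len(numbers) / len(words)


print(round(factual_density("Gartner expects search volume to drop 25% by 2026."), 1))  # 22.2
```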

Test by querying LLMs (ChatGPT, Claude, Gemini, DeepSeek) with niche-specific questions and comparing the responses to your content. If your content is reflected in AI answers, that's a positive sign.

Analyze competitors in AI responses to identify gaps in your content.
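To compare how often you and your competitors appear, you can run the same niche questions through each assistant, save the answers, and score them. The hypothetical helper below covers only the scoring step: it reports the share of saved responses that mention each brand as a whole word, ignoring case. Collecting the responses (manually or through a vendor's API) is left out, and the brand names and responses are made up. Because AI answers vary from run to run, repeat each question several times before drawing conclusions.

```python
import re


def mention_share(responses: list[str], brands: list[str]) -> dict[str, float]:
    """Fraction of responses mentioning each brand (whole word, case-insensitive)."""
    shares: dict[str, float] = {}
    for brand in brands:
        pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
        hits = sum(1 for text in responses if pattern.search(text))
        shares[brand] = hits / len(responses) if responses else 0.0
    return shares


# Hypothetical saved answers to the same niche question.
responses = [
    "AcmeSEO offers LLM visibility audits.",
    "Try RivalCo for link building.",
    "Popular tools include Ahrefs and Semrush.",
    "acmeseo publishes seeding guides.",
]
print(mention_share(responses, ["AcmeSEO", "RivalCo"]))  # {'AcmeSEO': 0.5, 'RivalCo': 0.25}
```

Tracking these shares over time, for a fixed set of questions, gives a rough visibility trend even though exact dataset inclusion stays invisible.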

Tools for analysis:

- AI Ranker for visibility and ranking.

- Hemingway App, Readability for text clarity.

- Ahrefs, Semrush for semantic core and structure.

- Copyscape, Plagiarism Checker for uniqueness.

Create a prioritized list of materials needing optimization based on LLM potential and required changes.

Step 2: Optimize Structure and Semantics

Next, put the structure and semantic recommendations into practice.

Structural Optimization:

- Implement Clear Heading Hierarchy:
  - H1: Main topic (one per page).
  - H2: Major sections.
  - H3: Subsections.
  - H4: Optional subpoints.

- Refine Long Paragraphs:
  - Break into 3–5 sentence chunks.
  - Add subheadings for logical blocks.
  - Convert enumerations to lists.

- Add Structural Elements:
  - "Key Points" or "Important Notes" blocks.
  - FAQ sections with direct answers.
  - Summaries for complex sections.
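The heading rules above (one H1 per page, then H2, H3, and H4 in order) can be checked automatically on a page's HTML. This sketch uses Python's standard `html.parser` to list heading levels and flags two problems: an H1 count other than one, and a jump of more than one level (such as H2 straight to H4). HTML itself doesn't forbid skipping levels; the check simply enforces the hierarchy recommended here. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class HeadingAudit(HTMLParser):
    """Record heading levels (h1-h6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # Tag names arrive lowercased; "hr" and similar tags are skipped.
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html: str) -> list[str]:
    """Return human-readable issues with the page's heading hierarchy."""
    audit = HeadingAudit()
    audit.feed(html)
    problems = []
    h1_count = audit.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one h1, found {h1_count}")
    for prev, cur in zip(audit.levels, audit.levels[1:]):
        if cur > prev + 1:
            problems.append(f"h{prev} followed by h{cur} skips a level")
    return problems


page = "<h1>LLM Seeding</h1><h2>Platforms</h2><h4>Quora</h4><h2>Ethics</h2>"
print(heading_problems(page))  # ['h2 followed by h4 skips a level']
```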

Semantic Optimization:

- Expand Semantic Core:
  - Include synonyms and related terms.
  - Cover associated concepts.
  - Use varied phrasing for key ideas.

- Strengthen Factual Base:
  - Add specific numbers, stats, and dates.
  - Cite authoritative sources.
  - Include real-world examples.

- Enhance Readability:
  - Use active voice over passive.
  - Shorten sentences where possible.
  - Replace jargon with clear terms, maintaining accuracy.
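The readability points can also be checked by script. This rough sketch flags likely passive constructions (a form of "to be" followed by a word ending in "-ed") and sentences over a word limit. The 25-word limit is an assumed threshold, and the regex misses irregular participles such as "written" while occasionally catching adjectives, so treat its output as hints for a human editor.

```python
import re

# Heuristic: "was published", "are tracked", etc. Irregular participles are not detected.
PASSIVE = re.compile(r"\b(?:am|is|are|was|were|be|been|being)\s+\w+ed\b", re.IGNORECASE)


def readability_hints(text: str, max_words: int = 25) -> list[str]:
    """Return hints about likely passive voice and overly long sentences."""
    hints = []
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        if not sentence:
            continue
        if PASSIVE.search(sentence):
            hints.append(f"possible passive voice: {sentence!r}")
        word_count = len(sentence.split())
        if word_count > max_words:
            hints.append(f"long sentence ({word_count} words): {sentence!r}")
    return hints


if __name__ == "__main__":
    for hint in readability_hints("The report was published in May. We publish reports every quarter."):
        print(hint)
```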


Technical Optimization:

- Metadata:
  - Optimize title, description, and image alt-text.
  - Include key terms in URLs.
  - Use Schema markup for structured data.

- Internal Linking:
  - Add cross-links to related content.
  - Create glossaries for technical terms.
  - Include detailed tables of contents for long articles.
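For the Schema markup item, structured data is usually embedded as JSON-LD inside a `<script type="application/ld+json">` tag. The sketch below builds a schema.org `Article` object (including `dateModified`) and a `FAQPage` object for a direct-answer FAQ section, plus a simple slug helper for keyword-bearing URLs. The page details, the `example.com` URL, and the helper names are hypothetical; validate real markup with a tool such as Google's Rich Results Test.

```python
import json
import re


def slugify(title: str) -> str:
    """Lowercase the title and join its letters/digits runs with hyphens."""
    return "-".join(re.findall(r"[a-z0-9]+", title.lower()))


def article_jsonld(title: str, author: str, published: str, modified: str, base_url: str) -> dict:
    """schema.org Article with publication and modification dates (ISO 8601 strings)."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": title,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,
        "dateModified": modified,
        "url": f"{base_url}/{slugify(title)}",
    }


def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    """schema.org FAQPage built from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


if __name__ == "__main__":
    article = article_jsonld(
        "What Is LLM Seeding?", "Jane Doe", "2025-03-01", "2025-08-15", "https://example.com/blog"
    )
    print('<script type="application/ld+json">')
    print(json.dumps(article, indent=2))
    print("</script>")
```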

Balance optimization for LLMs with human readability to avoid keyword-heavy, unnatural text.
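A quick way to catch keyword-heavy text is to measure how much of a page a single phrase occupies. This sketch computes the percentage of words taken up by occurrences of a phrase; the 3% warning threshold is an assumed rule of thumb, not a documented limit used by LLMs or search engines.

```python
import re


def keyword_density(text: str, phrase: str) -> float:
    """Percentage of the text's words that belong to occurrences of the phrase."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return 100 * hits * n / len(words)


def is_overoptimized(text: str, phrase: str, threshold: float = 3.0) -> bool:
    """True if the phrase's density exceeds the (assumed) threshold."""
    return keyword_density(text, phrase) > threshold


if __name__ == "__main__":
    stuffed = "LLM seeding helps. LLM seeding works. Try LLM seeding."
    print(f"{keyword_density(stuffed, 'LLM seeding'):.1f}%")
```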

Step 3: Place Content on AI-Priority Platforms

After optimizing your content, strategically place it on external platforms. Follow this plan:

- Identify Priority Platforms based on your niche (see above for examples).

- Adapt Content to platform formats:
  - GitHub: Markdown documentation.
  - Medium: Long-form articles with visuals.
  - Quora: Detailed Q&A responses.

- Create a Publication Plan:
  - Schedule regular posts to build authority.
  - Start with high-priority platforms.
  - Align with industry events or trends.

- Enhance Cross-Platform Presence:
  - Link publications across platforms.
  - Maintain consistent terminology and concepts.
  - Ensure uniform brand representation.

- Secure Social Proof:
  - Encourage comments and discussions.
  - Promote sharing on social media.
  - Gain endorsements from industry experts.
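A first draft of the publication plan can be generated. This sketch assumes you have already ranked platforms by priority and want one post per fixed interval, cycling through the platforms so that the highest-priority ones come first in each round. The platform list, start date, and two-week cadence are placeholder assumptions; adjust the dates by hand around industry events.

```python
from datetime import date, timedelta
from itertools import cycle


def publication_plan(platforms: list[str], start: date, posts: int,
                     every_days: int = 14) -> list[tuple[date, str]]:
    """Return (date, platform) pairs, rotating through platforms in priority order."""
    if not platforms:
        return []
    rotation = cycle(platforms)
    return [(start + timedelta(days=i * every_days), next(rotation)) for i in range(posts)]


if __name__ == "__main__":
    ranked = ["GitHub", "Medium", "Quora"]  # highest priority first
    for when, platform in publication_plan(ranked, date(2025, 9, 1), posts=6):
        print(when.isoformat(), platform)
```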

LLM seeding is about creating a cohesive informational ecosystem in which multiple authoritative sources validate your expertise, improving the chances that your content is included in LLM training datasets.

For professional placement, use LinkBuilder.com, which specializes in strategic seeding for LLMs, offering:

- Expert Q&A responses on Quora.

- HARO submissions for media citations.

- Reddit and forum discussion participation.

- Guest posts on authoritative industry sites.

Step 4: Regular Updates and Expansion

LLM seeding is an ongoing process. Models favor fresh, up-to-date content, but they are retrained only periodically, which is why seeding takes time to show results.

Audit Outdated Content for:

- Obsolete statistics.

- Changed technologies or methods.

- Irrelevant examples or case studies.

Recommended frequency: quarterly for fast-changing industries (tech, marketing), biannually for stable ones.
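To keep audits on schedule, you can flag pages whose last review is older than the recommended interval. The sketch encodes the quarterly and twice-yearly guidance as roughly 91 and 182 days; the page records and the `fast_changing` flag are hypothetical inputs you would pull from your CMS or a spreadsheet.

```python
from datetime import date, timedelta

# Approximations of the guidance above: quarterly (fast-changing) vs. twice a year (stable).
INTERVALS = {True: timedelta(days=91), False: timedelta(days=182)}


def overdue_pages(pages: list[dict], today: date) -> list[str]:
    """Return URLs whose last review is older than their industry's audit interval."""
    overdue = []
    for page in pages:
        interval = INTERVALS[page["fast_changing"]]
        if today - page["last_reviewed"] > interval:
            overdue.append(page["url"])
    return overdue


if __name__ == "__main__":
    pages = [
        {"url": "/blog/ai-tools-2025", "fast_changing": True, "last_reviewed": date(2025, 3, 1)},
        {"url": "/guides/basics", "fast_changing": False, "last_reviewed": date(2025, 5, 1)},
    ]
    print(overdue_pages(pages, today=date(2025, 8, 15)))
```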

Content Updates:

- Refresh Statistics and Data:
  - Replace old figures with current ones.
  - Add new research or reports.
  - Note update dates.

- Expand Examples and Cases:
  - Include new success stories.
  - Update existing case results.
  - Add modern use cases.

- Reflect New Trends:
  - Cover recent developments.
  - Adapt recommendations to new conditions.
  - Comment on significant industry events.

Expand Semantic Reach:

- Add sections on related topics.

- Deepen existing topics with details.

- Address new FAQs.

Maintain Cross-Platform Consistency:

- Sync updates across your site and external platforms.

- Refresh previously published external content.

- Create new materials for updates.

Updates may not immediately appear in AI responses due to periodic training cycles.

Tips:

- Create new content based on updates.

- Use phrases like "As of August 2025…" for temporal clarity.

- Tag updated materials with "Updated" and dates.

- Archive old versions to track changes.
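The dated "Updated" label and the archive of old versions can be combined in a small versioning helper. This sketch keeps previous versions in memory alongside their dates; a real site would store them in its CMS or version control. The class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class VersionedPage:
    title: str
    body: str
    updated: date
    archive: list[tuple[date, str]] = field(default_factory=list)

    def update(self, new_body: str, on: date) -> None:
        """Archive the current body with its date, then replace it."""
        self.archive.append((self.updated, self.body))
        self.body = new_body
        self.updated = on

    def label(self) -> str:
        """Visible update tag plus an "As of" phrase for temporal clarity."""
        return f"Updated {self.updated.isoformat()} | As of {self.updated:%B %Y}"


if __name__ == "__main__":
    page = VersionedPage("LLM Seeding Guide", "v1 text", date(2025, 2, 1))
    page.update("v2 text with fresh statistics", date(2025, 8, 15))
    print(page.label())
    print(len(page.archive), "archived version(s)")
```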

Evergreen content with regular updates balances long-term relevance with fresh data.

Step 5: Monitor Indexing and Adjust Strategy

Track results and refine your seeding strategies:

- Test Queries – Regularly ask niche-specific questions to LLMs or use AI Ranker.

- Track Attribution – Check if your brand, product, or expert is mentioned in relevant responses.

- Analyze Wording – Look for your unique phrases or terms in AI answers.

- Monitor Backlinks – Track links to your content from authoritative sites.
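Attribution and wording checks are easy to script once you have saved the LLM responses, whether copied manually or taken from a monitoring tool (no particular export format is assumed here). The sketch searches each response for brand names and signature phrases using case-insensitive, whole-word matching. The brand, expert, and phrase values are hypothetical examples.

```python
import re


def find_mentions(response: str, terms: list[str]) -> list[str]:
    """Return the terms that appear in the response as whole words, ignoring case."""
    found = []
    for term in terms:
        # Lookarounds instead of \b so terms that start or end with punctuation still match.
        pattern = r"(?<!\w)" + re.escape(term) + r"(?!\w)"
        if re.search(pattern, response, re.IGNORECASE):
            found.append(term)
    return found


if __name__ == "__main__":
    brand_terms = ["Acme Analytics", "Jane Doe"]      # hypothetical brand and expert
    signature_phrases = ["citation-ready content"]    # hypothetical unique phrase
    saved = {
        "best tools for LLM visibility": "Popular options include Acme Analytics and others.",
        "how to get cited by ChatGPT": "Focus on citation-ready content, as Jane Doe suggests.",
    }
    for query, answer in saved.items():
        print(query, "->", find_mentions(answer, brand_terms + signature_phrases))
```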

Interpret Results and Adjust:

- Analyze Successful Content:
  - Which topics appear most in LLM responses?
  - Which formats perform best?
  - Which platforms host frequently cited content?

- Identify Gaps:
  - Which queries miss your content?
  - Which topic aspects are underdeveloped?
  - Why are competitors cited more?

- Refine Strategy:
  - Increase presence on effective platforms.
  - Adjust content format or structure.
  - Expand the semantic core based on AI responses.

Seeding strategy optimization cycle: run test queries, analyze what succeeds, identify gaps, refine the strategy, then test again.

Key metrics:

- Mention Frequency – How often your brand/expert appears in responses.

- Attribution Accuracy – Correctness of information cited.

- Topic Coverage – Range of queries featuring your content.

- Conversion – Traffic from AI mentions (hard to track).
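The first three metrics can be computed from a simple log of test queries. This sketch assumes each record notes the query, its topic, whether your brand or expert was mentioned, and, if so, whether the cited information was correct (judged by you). Conversion is left out because it is hard to track from responses alone. The record fields are hypothetical; the function also lists queries with no mention, which feed the "Identify Gaps" step.

```python
from dataclasses import dataclass


@dataclass
class QueryResult:
    query: str
    topic: str
    mentioned: bool
    accurate: bool = False  # only meaningful when mentioned is True


def seeding_metrics(results: list[QueryResult]) -> dict:
    """Mention frequency, attribution accuracy, topic coverage, and gap queries."""
    if not results:
        return {"mention_frequency": 0.0, "attribution_accuracy": 0.0,
                "topic_coverage": 0.0, "gaps": []}
    mentioned = [r for r in results if r.mentioned]
    topics = {r.topic for r in results}
    covered = {r.topic for r in mentioned}
    return {
        "mention_frequency": len(mentioned) / len(results),
        "attribution_accuracy": (sum(r.accurate for r in mentioned) / len(mentioned)) if mentioned else 0.0,
        "topic_coverage": len(covered) / len(topics),
        "gaps": [r.query for r in results if not r.mentioned],
    }


if __name__ == "__main__":
    log = [
        QueryResult("what is llm seeding", "seeding", mentioned=True, accurate=True),
        QueryResult("llm seeding platforms", "seeding", mentioned=True, accurate=False),
        QueryResult("schema for faq pages", "technical", mentioned=False),
    ]
    print(seeding_metrics(log))
```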

LLM seeding is a long-term effort, with results compounding through systematic content improvement.

The Future of Content Seeding: Trends and Predictions

The world of large language models evolves rapidly, and seeding strategies must adapt. What works for LLMs today may shift with technological breakthroughs.

Multimodal Models (text, images, video) will dominate. Models like GPT-4V, Gemini, and Claude 3 already process images, with video and audio capabilities emerging.

Seeding implications:

- Multi-format content (text, visuals, video) will become standard for higher trust and informativeness.

- Visual optimization (structure, captions, alt-text) will rival text in importance.

- Audio content (podcasts, interviews) will gain value via transcription and speech analysis.

Personalized AI Responses. As LLMs tailor answers to individual users, seeding strategies should include:

- Targeted content for diverse demographics and psychographics.

- Multi-level materials for beginners and experts.

- Tone and style adaptation for various use cases.

Real-Time Training and Updates. Future models may update continuously, increasing the value of:

- News and timely data.

- Industry event commentary.

- Regularly updated resources.

Source Verification. Growing concerns about reliability will prioritize content:

- Validated by independent sources.

- Published by verified experts.

- Linked to primary sources.

Localization and Cultural Context. Next-generation LLMs will better handle regional and cultural nuances, emphasizing:

- Region-specific content.

- Cultural sensitivity.

- Multilingual representation.

Integration with Other Technologies. LLMs will join ecosystems involving:

- IoT for contextual data.

- AR for data visualization.

- Blockchain for content authorship verification.

Ethical Standards and Regulation. Stricter standards will address misinformation and manipulation, requiring:

- Transparent content creation and distribution.

- Certified content for critical fields.

- Clear fact-opinion separation.

How do you make an LLM content strategy future-proof? Focus on multi-format, factually accurate, expert-driven content that is easy to verify. This approach is the most likely to stay effective as the technology changes.